Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

Hands-On GPU Computing with Python: Explore the capabilities of GPUs for solving high performance computational problems : Bandyopadhyay, Avimanyu: Amazon.in: Books

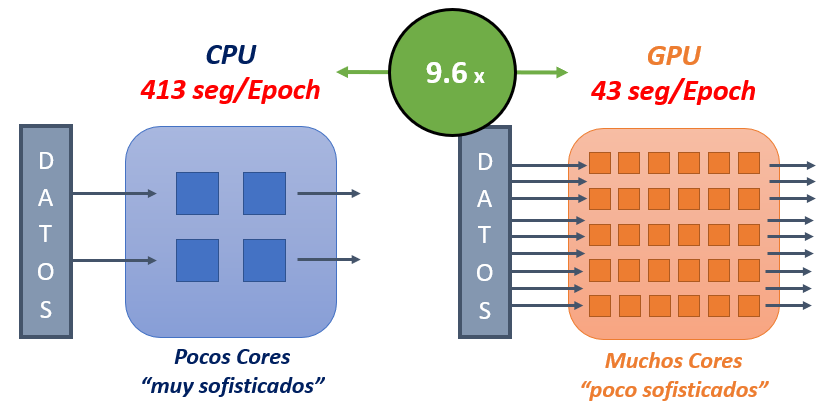

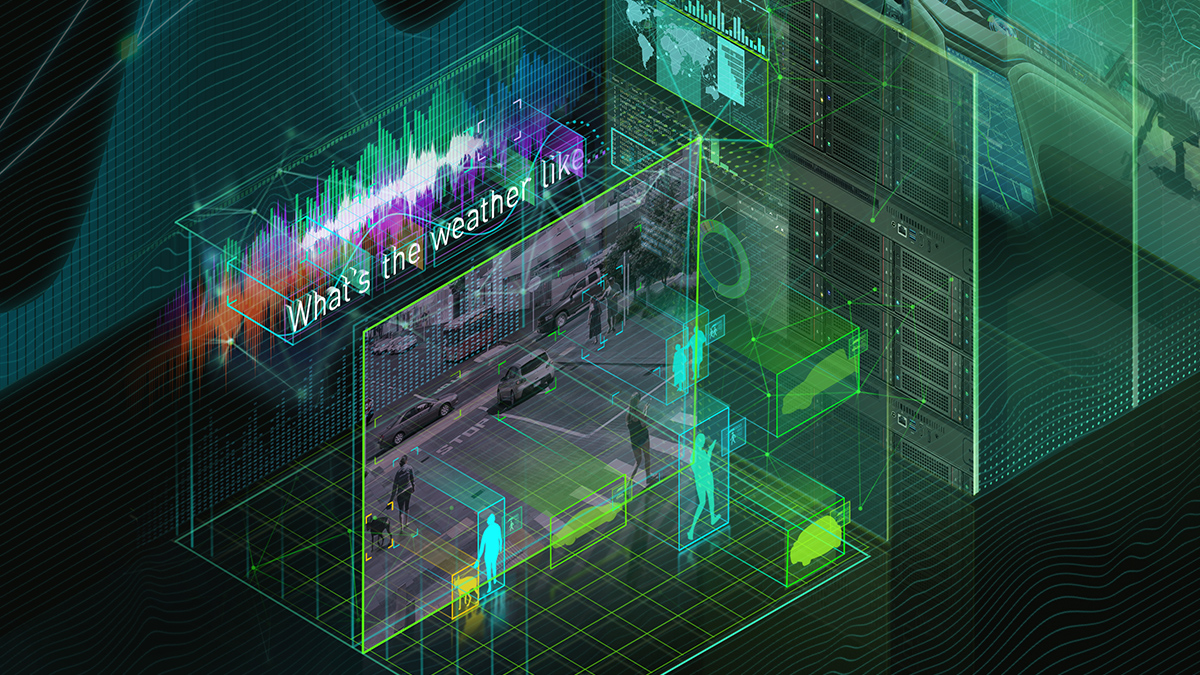

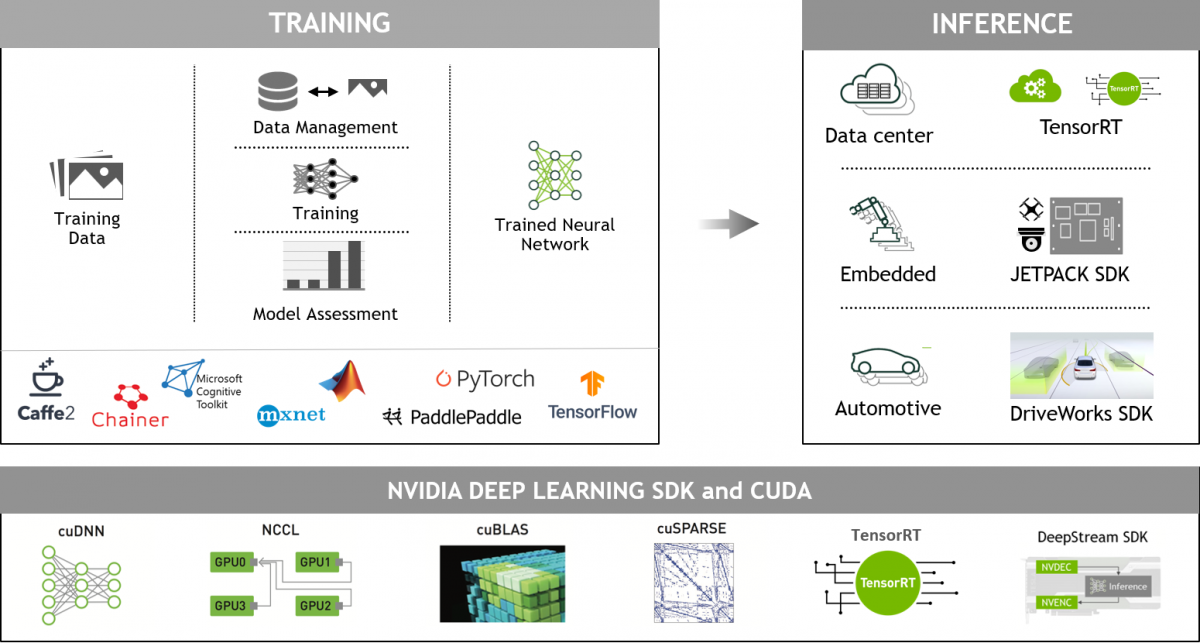

NVIDIA's Answer: Bringing GPUs to More Than CNNs - Intel's Xeon Cascade Lake vs. NVIDIA Turing: An Analysis in AI

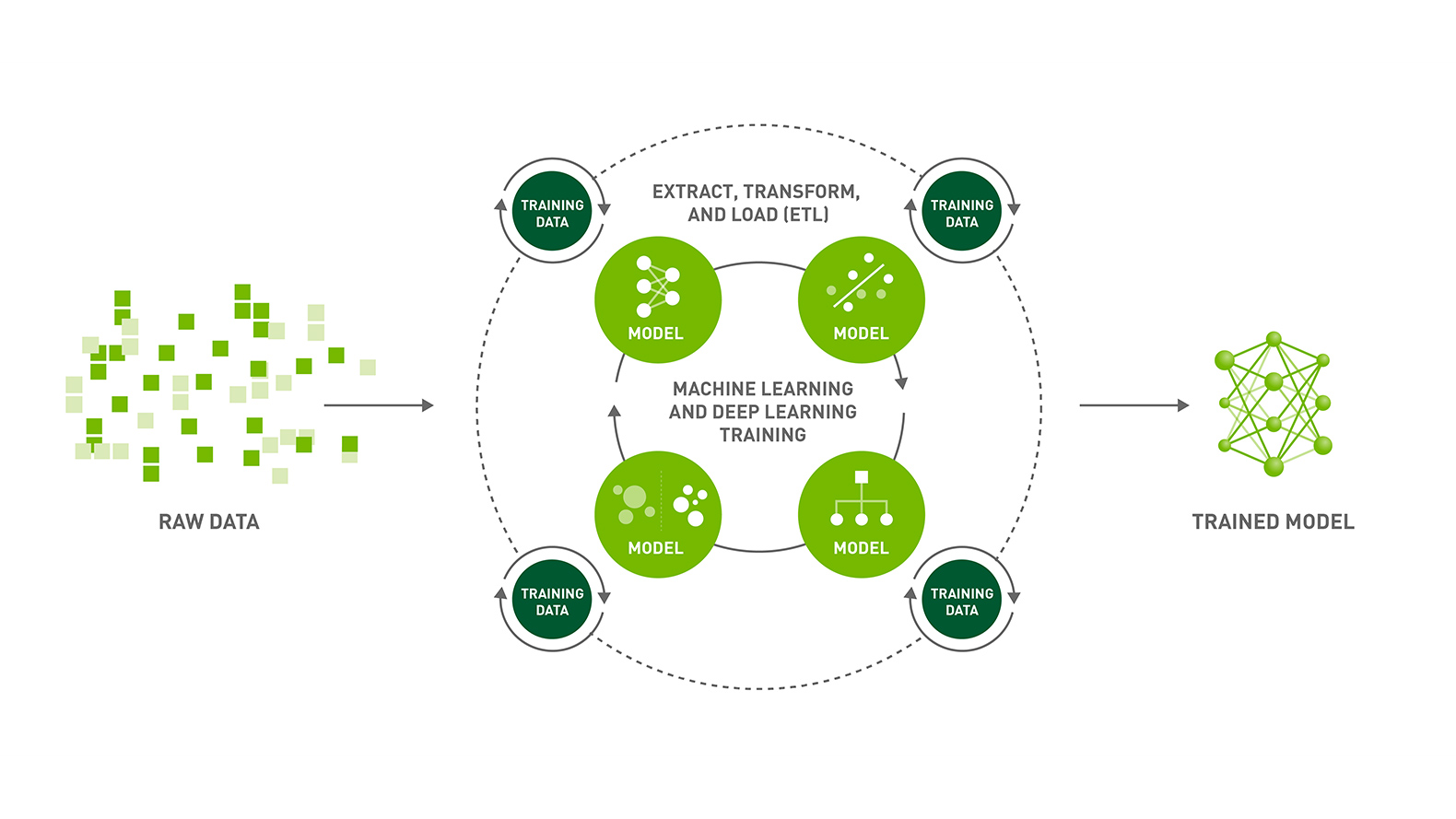

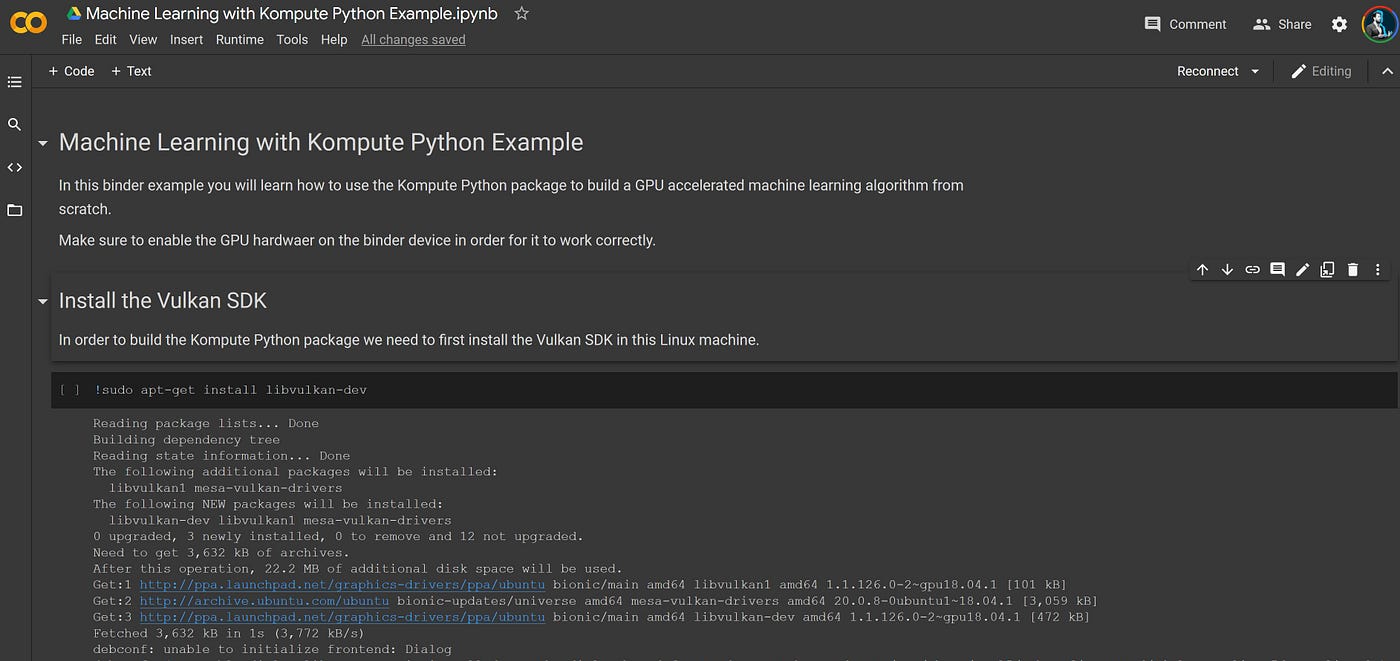

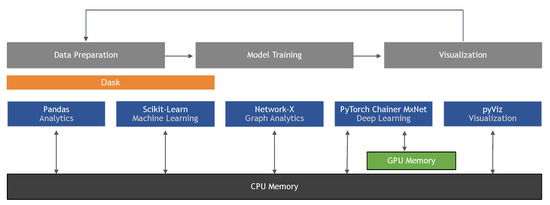

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

GPU parallel computing for machine learning in Python: how to build a parallel computer (English Edition) eBook : Takefuji, Yoshiyasu: Amazon.es: Kindle Store

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence